What AI Actually Is

(And Why CES Just Told You Everything)

The biggest tech conference in the world just revealed something most people missed.

At CES last week, Lenovo’s CEO announced that AI spending is flipping: from 80% training / 20% inference to roughly the reverse. By 2026, he said, about two-thirds of all AI computing will be inference: taking everything a system learned and applying it to new situations.

If that sounds like jargon, here’s what it actually means for you:

AI is an inference engine. That’s it. That’s the whole thing.

When you ask ChatGPT a question, it’s not “thinking.” It’s running inference: calculating the statistically most probable next word (technically, the next token), then the next, then the next. It learned patterns from billions of documents. Now it applies those patterns to your prompt. Training was the homework. Inference is the exam, taken billions of times per day.

What you get back is “the next most likely step.” AI doesn’t understand your question. It predicts what words usually follow your words, based on everything it’s seen before.
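To make “predict the next word” concrete, here is a toy sketch in Python. It is nothing like ChatGPT’s actual internals (real models are neural networks working on tokens, and they often sample rather than always taking the top choice). It’s a hypothetical word-pair counter, and the names train, next_word and generate are made up for illustration. “Training” counts which word follows which in a few example sentences. “Inference” looks up the most frequent follower, again and again, and never changes the counts.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train(corpus: List[str]) -> Dict[str, Counter]:
    """'Training' (hypothetical toy): count which word follows which."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word right after it.
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    # Freeze into a plain dict so lookups never add new entries.
    return dict(follows)


def next_word(model: Dict[str, Counter], word: str) -> Optional[str]:
    """'Inference': return the most frequent follower of `word`, or None if unseen."""
    counts = model.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(model: Dict[str, Counter], start: str, max_words: int = 5) -> List[str]:
    """Chain inference steps: the next word, then the next, then the next.

    The model is only read here, never updated.
    """
    words = [start]
    for _ in range(max_words):
        nxt = next_word(model, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return words


# Illustrative training data, not real usage statistics.
corpus = [
    "sounds good to me",
    "sounds good to me",
    "sounds great thanks",
]
model = train(corpus)
print(generate(model, "sounds"))  # ['sounds', 'good', 'to', 'me']
```

Ask it to continue “sounds” and it returns “sounds good to me,” because that’s the most common path in its tiny training set. Nothing in generate writes back to the model: every answer is a lookup on the same frozen counts.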

That’s incredibly powerful for some things. A self-driving car recognizing a stop sign? Inference. A fraud detection system flagging an unusual transaction? Inference. Your email suggesting “Sounds good!” as a reply? Inference.

Pattern in. Pattern out. At scale. At speed.

Here’s what the industry shift tells us:

The hard part, building the pattern libraries, is maturing. The money is now flowing toward deploying those patterns everywhere. Into your phone. Your retail store. Your hospital. Your factory. Inference is AI’s “working phase,” and we’re entering it full throttle.

But notice what’s missing.

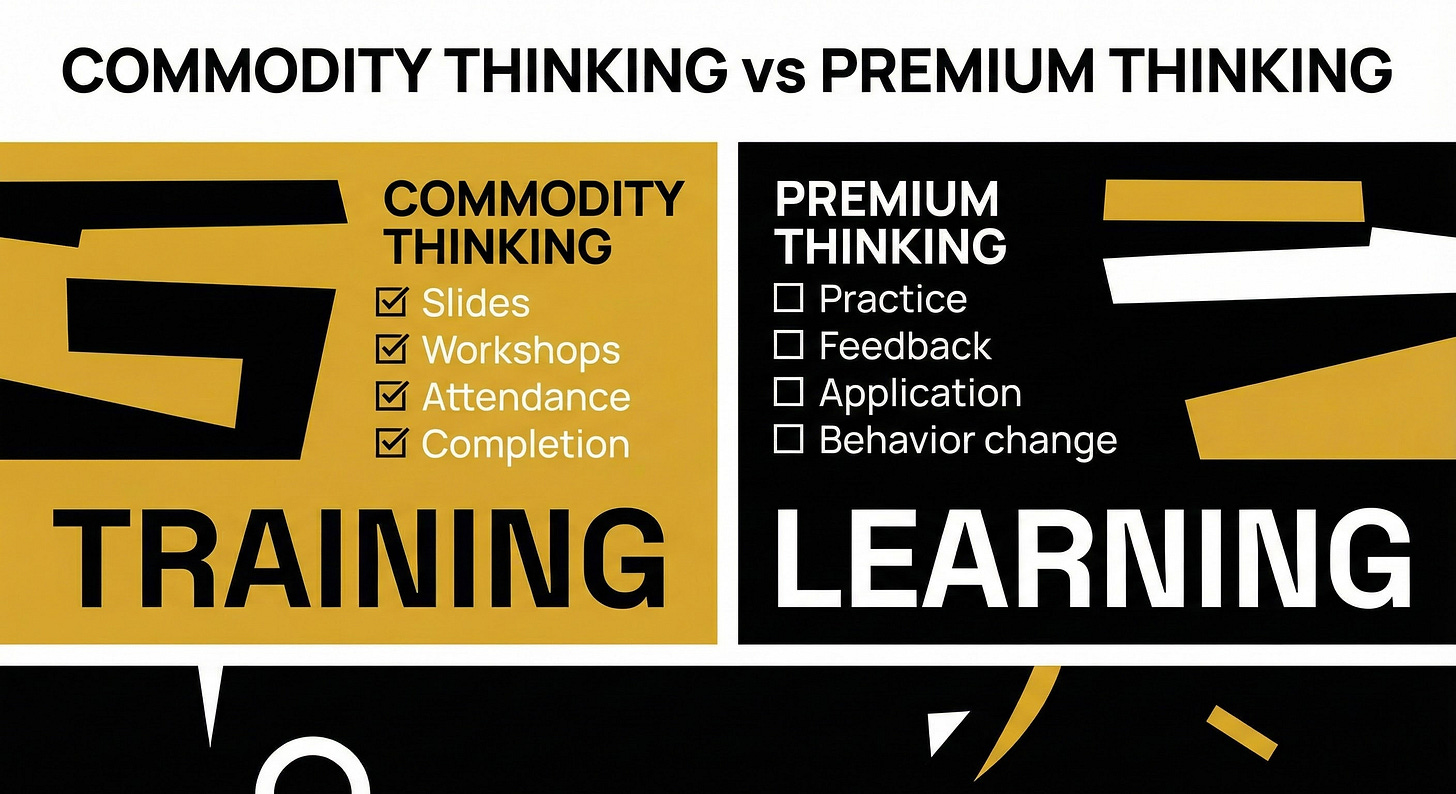

Look at the graphic above. Organizations love checking boxes: slides delivered, workshops attended, completion certificates issued. That’s corporate training. But training doesn’t produce behavior change. It doesn’t build judgment. It doesn’t create the ability to handle situations that weren’t in the curriculum.

AI has the same limitation, just faster.

An AI system can complete its training perfectly (billions of parameters, trillions of tokens) and still only do inference. It applies what it learned. It doesn’t learn from what it applies. When you use ChatGPT, the underlying model doesn’t change because of you. It doesn’t grow. It doesn’t develop judgment about your situation. Every response is inference on a static model.

What this means for your thinking:

The skills that AI does beautifully - recall, pattern-matching, producing the probable response - are becoming commodity infrastructure. The tech giants are building inference servers the way previous generations built electrical grids. Power on demand.

That leaves the other side of the graphic. The empty boxes.

Practice. Feedback. Application. Behavior change.

These require something inference can’t do: updating the model based on what happens next. Evaluating whether the pattern should apply here. Creating something the pattern wouldn’t predict. Premium Thinking only a human can do.

Inference gives you the most likely answer. Sometimes you need the right answer, and those aren’t always the same thing.

AI is an extraordinary tool for executing patterns at scale. The CES news just confirmed the entire economy is tooling up to do exactly that. What it’s not tooling up for is the thinking that happens when patterns aren’t enough.

That thinking still requires a human. Premium Thinking.

And it still has to be learned.

The industry calls it inference. I call it the commodity side of cognition. The premium isn’t in applying patterns—it’s in knowing when to break them.
